Callback

A Callback class can be used to receive a notification from the algorithm object in each generation. This can be useful for tracking metrics, performing additional calculations, or even modifying the algorithm object during the run. The latter is recommended only for experienced users.

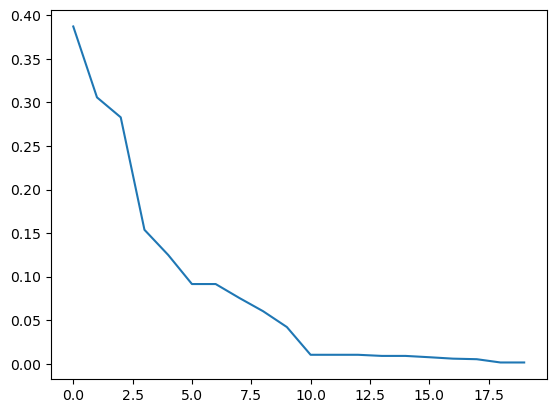
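The notification mechanism can be pictured with a small, library-free sketch. The `ToyAlgorithm` and `RecordBest` classes below are hypothetical and are not pymoo's implementation; they only illustrate the pattern in which the optimization loop hands itself to the callback once per generation.

```python
import random


class RecordBest:
    """Hypothetical callback: stores the best value seen in each generation."""

    def __init__(self):
        self.data = {"best": []}

    def notify(self, algorithm):
        # The callback receives the whole algorithm object and reads what it needs.
        self.data["best"].append(min(algorithm.pop))


class ToyAlgorithm:
    """Hypothetical stand-in for an optimizer; not pymoo's Algorithm class."""

    def __init__(self, pop_size, callback, seed=1):
        self.rng = random.Random(seed)
        self.pop = [self.rng.uniform(0.0, 1.0) for _ in range(pop_size)]
        self.callback = callback
        self.n_gen = 0

    def run(self, n_gen):
        for _ in range(n_gen):
            self.n_gen += 1
            # Toy "evolution": perturb each value and keep it only if it got smaller.
            candidates = [abs(x + self.rng.gauss(0.0, 0.1)) for x in self.pop]
            self.pop = [min(old, new) for old, new in zip(self.pop, candidates)]
            # Notify the callback exactly once per generation.
            self.callback.notify(self)
        return self


toy = ToyAlgorithm(pop_size=10, callback=RecordBest()).run(n_gen=5)
print(toy.callback.data["best"])
```

After the run, the recorded list holds one entry per generation, which is the same shape of data the pymoo example on this page collects.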

The example below implements a less memory-intensive way of keeping track of the convergence. A posteriori analysis can, on the one hand, be done by using the save_history=True option. This, however, stores a deep copy of the Algorithm object in each iteration, which might be more information than necessary. The Callback, on the other hand, allows selecting only the information that needs to be analyzed once the run has terminated. Another good use case is visualizing data in each iteration in real time.

Tip

The callback has full access to the algorithm object and thus can also alter it. However, the callback’s purpose is not to customize an algorithm but to store or process data.

[1]:

import matplotlib.pyplot as plt
import numpy as np

from pymoo.algorithms.soo.nonconvex.ga import GA
from pymoo.problems import get_problem
from pymoo.core.callback import Callback
from pymoo.optimize import minimize


class MyCallback(Callback):

    def __init__(self) -> None:
        super().__init__()
        # Only the best objective value of each generation is stored.
        self.data["best"] = []

    def notify(self, algorithm):
        # Called with the current algorithm object in each generation.
        self.data["best"].append(algorithm.pop.get("F").min())


problem = get_problem("sphere")

algorithm = GA(pop_size=100)

res = minimize(problem,
               algorithm,
               ('n_gen', 20),
               seed=1,
               callback=MyCallback(),
               verbose=True)

val = res.algorithm.callback.data["best"]
plt.plot(np.arange(len(val)), val)
plt.show()

=================================================================
n_gen  |  n_eval  |     f_avg     |     f_min     |     f_gap
=================================================================
     1 |      100 |  0.8402330805 |  0.3110080067 |  0.3110080067
     2 |      200 |  0.5840619254 |  0.2608389362 |  0.2608389362
     3 |      300 |  0.4311230739 |  0.2141791742 |  0.2141791742
     4 |      400 |  0.3256989200 |  0.1747990986 |  0.1747990986
     5 |      500 |  0.2528832854 |  0.1090437159 |  0.1090437159
     6 |      600 |  0.1957895271 |  0.0883593233 |  0.0883593233
     7 |      700 |  0.1498475068 |  0.0723121564 |  0.0723121564
     8 |      800 |  0.1147224003 |  0.0385041510 |  0.0385041510
     9 |      900 |  0.0886857837 |  0.0321901509 |  0.0321901509
    10 |     1000 |  0.0669225385 |  0.0278150867 |  0.0278150867
    11 |     1100 |  0.0479183842 |  0.0258741635 |  0.0258741635
    12 |     1200 |  0.0371069207 |  0.0169932013 |  0.0169932013
    13 |     1300 |  0.0298104627 |  0.0169932013 |  0.0169932013
    14 |     1400 |  0.0251838079 |  0.0163479517 |  0.0163479517
    15 |     1500 |  0.0217146006 |  0.0118196679 |  0.0118196679
    16 |     1600 |  0.0175706067 |  0.0070060442 |  0.0070060442
    17 |     1700 |  0.0140096409 |  0.0053171551 |  0.0053171551
    18 |     1800 |  0.0109934002 |  0.0052887786 |  0.0052887786
    19 |     1900 |  0.0088322394 |  0.0045948657 |  0.0045948657
    20 |     2000 |  0.0072640947 |  0.0040418655 |  0.0040418655

Note that the Callback object needs to be accessed from the Result object via res.algorithm.callback because the original object remains unmodified to ensure reproducibility.

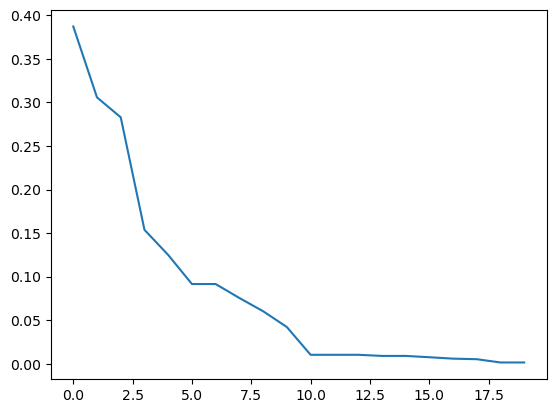

For completeness, the history-based convergence analysis looks as follows:

[2]:

res = minimize(problem,
               algorithm,
               ('n_gen', 20),
               seed=1,
               save_history=True)

val = [e.opt.get("F")[0] for e in res.history]
plt.plot(np.arange(len(val)), val)
plt.show()
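To get a feeling for the memory trade-off between the two approaches, the following library-free sketch compares storing a deep copy of a state object per iteration with storing a single number per iteration. `ToyState` is a hypothetical stand-in for an algorithm object, and the count of stored floats is only a rough proxy; pymoo's actual memory footprint will differ.

```python
import copy
import random


class ToyState:
    """Hypothetical stand-in for an algorithm object holding a population."""

    def __init__(self, pop_size, n_var, seed=1):
        rng = random.Random(seed)
        self.pop = [[rng.random() for _ in range(n_var)] for _ in range(pop_size)]

    def best(self):
        # Sphere-like objective: sum of squares of each individual.
        return min(sum(x * x for x in ind) for ind in self.pop)


state = ToyState(pop_size=100, n_var=10)

history = []  # analogous to save_history=True: one deep copy per iteration
best = []     # analogous to the callback: one float per iteration

for _ in range(20):
    # The toy state is not evolved here; only the storage pattern matters.
    history.append(copy.deepcopy(state))
    best.append(state.best())

# Count the floats each approach keeps alive after the run.
history_floats = sum(len(ind) for snapshot in history for ind in snapshot.pop)
callback_floats = len(best)
print(history_floats, callback_floats)  # prints: 20000 20
```

In this toy setting the history keeps 20 x 100 x 10 values while the callback-style list keeps 20, which is the kind of gap that motivates recording only what is needed.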